Criterion Validity | Definition, Types & Examples

- Introduction

- What is criterion validity?

- Why is criterion validity important?

- Types of criterion validity

- Other types of validity

- Establishing criterion validity

Introduction

Criterion validity is a key concept in research methodology. It describes how well a quantitative test or measurement corresponds to the outcome it is intended to capture. This form of validity is essential for evaluating the effectiveness of assessments and tools across different fields.

By comparing a new measure to outcomes from an established test, researchers can determine how well the new measure predicts or correlates with the criterion outcome. Understanding criterion validity helps researchers design valid new measures and conduct robust research.

This article outlines the definition, types, and examples of criterion validity, providing a clear and concise guide for researchers and students alike.

What is criterion validity?

Criterion validity refers to the extent to which a measurement or test accurately predicts or correlates with an outcome based on an established criterion.

It is a critical aspect of evaluating the effectiveness of a new tool or assessment method. The primary goal of criterion validity is to determine whether the results of a new measure align with the outcomes of an established, previously validated measure.

There are two main ways to assess criterion validity: concurrent validity and predictive validity. Concurrent validity examines the correlation between the new measure and an established measure taken at the same time. This type of validity is useful when researchers need to validate a new test quickly, using existing, reliable data.

Predictive validity, on the other hand, assesses how well the new measure predicts future outcomes. This approach is particularly valuable in fields like psychology, education, and health, where predicting future performance or behavior is important.

For instance, in educational testing, a new reading comprehension test may be evaluated for criterion validity by comparing its results with those of an established test known to be reliable. If the new test's scores closely match the established test's scores, it demonstrates high concurrent validity. Alternatively, if the new test can accurately predict students' future academic performance, it exhibits high predictive validity.

Establishing criterion validity is essential for ensuring that a measurement tool is both accurate and reliable. Without it, the results of a study or assessment may be questionable, leading to incorrect conclusions or ineffective interventions.

Therefore, understanding and applying criterion validity is fundamental in research to develop robust and credible measurement instruments.

Why is criterion validity important?

Criterion validity ensures the accuracy and reliability of measurement tools used in research. By confirming that a new measure accurately reflects or predicts an established criterion, researchers can trust the results and conclusions drawn from their studies.

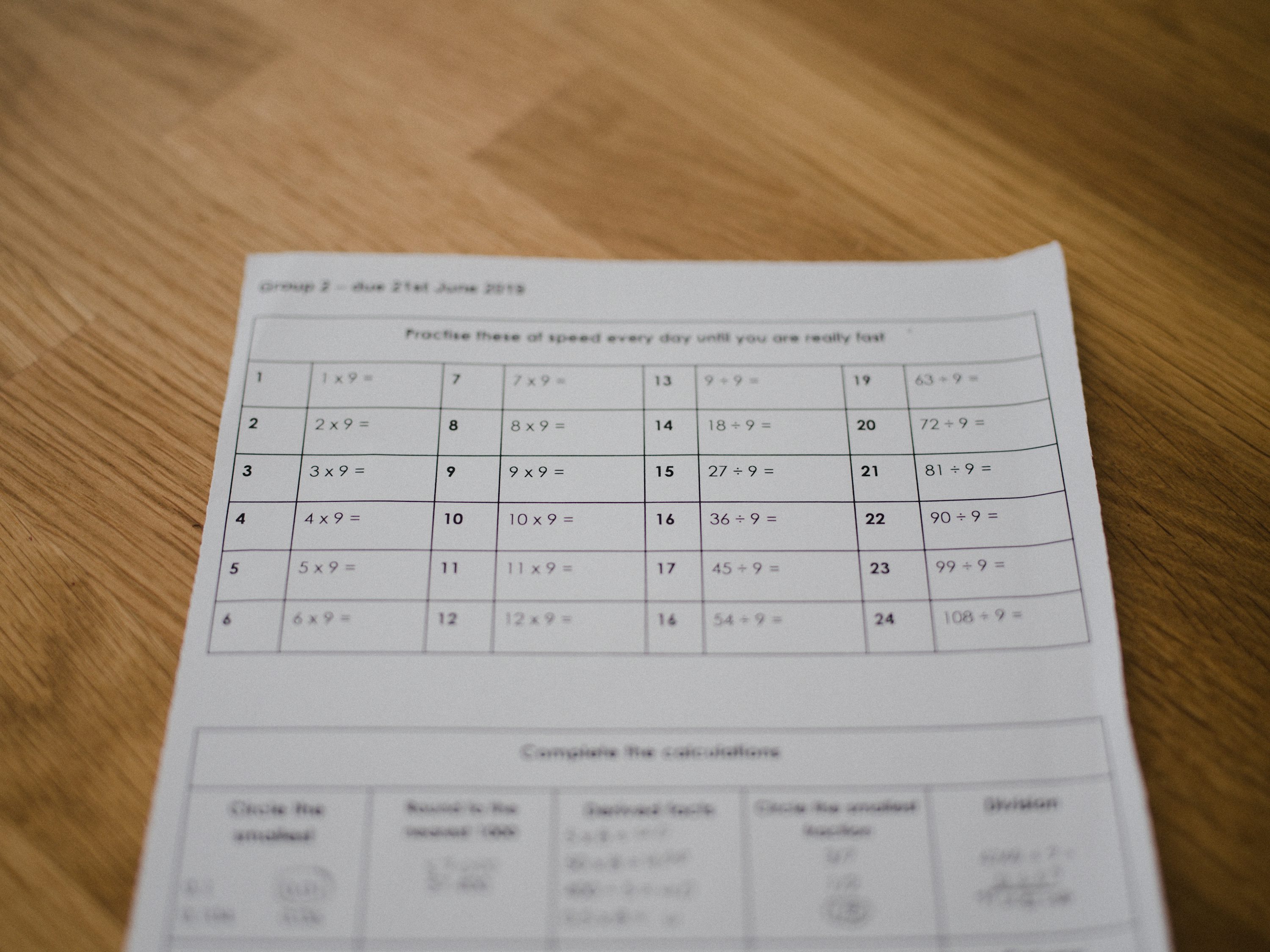

One primary reason criterion validity is important is that it helps verify the effectiveness of new assessment tools. For instance, in educational settings, developing a new test for measuring students' mathematical abilities requires validation against a trusted, established test. If the new test demonstrates high criterion validity, educators can confidently use it to assess and improve students' skills.

In clinical psychology, criterion validity is key to diagnosing and predicting outcomes. A new diagnostic tool for depression, for example, must be validated against existing, reliable diagnostic criteria.

High criterion validity ensures that the new tool accurately identifies individuals with depression and predicts their future mental health outcomes. This validation is essential for providing effective treatment and support.

Criterion validity also plays a significant role in employment and organizational settings. For example, when developing a new job performance assessment, it is important to validate it against current performance measures.

High criterion validity indicates that the new assessment accurately predicts job performance, aiding in effective hiring and employee development decisions.

Moreover, criterion validity contributes to the persuasiveness of research findings. When a measure demonstrates strong criterion validity, researchers can apply the findings across different contexts and populations with greater confidence. This broader applicability enhances the impact and utility of research.

Types of criterion validity

Criterion-related validity is commonly divided into two types, concurrent validity and predictive validity, which differ in whether the criterion is measured at the same time as the new measure or at a later point.

Two related forms, convergent validity and discriminant validity, are usually classified under construct validity, but they rely on the same kind of correlational evidence and are therefore described here as well. Each type provides a different perspective on the effectiveness of a measurement tool.

Concurrent validity

Concurrent criterion validity assesses the extent to which a new measure correlates with an established measure taken at the same time. This type of validity is particularly useful when researchers need to validate a new tool quickly using existing, reliable data.

By comparing the results of the new measure with those of a well-established criterion measure administered concurrently, researchers can determine if the new measure produces similar outcomes.

For example, in the context of educational testing, if a new reading comprehension test is administered alongside a well-validated reading test, and the scores from both tests show a strong correlation, the new test is said to have high concurrent validity. This indicates that the new test is effective in measuring the same construct as the established test.

Predictive validity

Predictive criterion validity evaluates how well a new measure predicts future outcomes based on an established criterion. This type of validity is essential in fields where forecasting future performance or behavior is critical, such as psychology, education, and healthcare.

By demonstrating predictive validity, researchers can show that their new measure is not only reliable in the present but also useful for making accurate predictions about future events or behaviors.

For instance, a new aptitude test designed to predict students' success in college might be validated by comparing its scores to students' future academic performance. If the test scores accurately predict how well students will perform in their college courses, the test exhibits high predictive validity. This type of validity is essential for creating tools that aid in long-term planning and decision-making.
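One way to make the aptitude test example concrete is a simple least-squares regression of the later outcome on the earlier test score. The sketch below is illustrative only: the scores and GPAs are invented, and the helper names `fit_line` and `r_squared` are assumptions introduced for this example, not part of any established procedure.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sum of squared deviations of x.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def r_squared(x, y, slope, intercept):
    """Share of the variance in y explained by the fitted line."""
    mean_y = sum(y) / len(y)
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - mean_y) ** 2 for b in y)
    return 1 - ss_res / ss_tot


# Hypothetical data: aptitude scores at admission and GPA one year later.
aptitude = [52, 61, 70, 48, 85, 77, 66, 90]
first_year_gpa = [2.4, 2.9, 3.1, 2.2, 3.8, 3.3, 3.0, 3.9]

slope, intercept = fit_line(aptitude, first_year_gpa)
fit = r_squared(aptitude, first_year_gpa, slope, intercept)
print(f"GPA = {intercept:.2f} + {slope:.3f} * aptitude, R^2 = {fit:.2f}")
```

A positive slope with a high R² would be consistent with predictive validity for this sample. Because the fit is evaluated on the same data used to estimate it, a real study would normally check the prediction on a separate group of students.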

Convergent validity

Convergent validity, which is usually treated as part of construct validity, examines whether a measure correlates well with other measures of the same construct. High convergent validity indicates that the new measure is consistent with other established measures that assess the same concept.

This type of validity is essential for ensuring that different tools intended to measure the same construct produce similar results, thereby supporting the robustness and credibility of the new measure.

For example, if a new scale for measuring anxiety levels shows a high correlation with existing, validated anxiety scales, it demonstrates high convergent validity. This consistency across different measures provides confidence that the new scale is accurately assessing anxiety.

Discriminant validity

Discriminant validity, which is also usually treated as part of construct validity, evaluates whether a measure is uncorrelated with measures of different constructs. High discriminant validity indicates that the new measure is distinct and not merely reflecting other, unrelated constructs.

This type of validity is important for establishing the uniqueness of a new measure and confirming that it is not inadvertently assessing something else.

For instance, if a new test designed to measure depression shows low correlation with measures of unrelated constructs like intelligence or physical health, it has high discriminant validity. This demonstrates that the new test specifically assesses depression without being confounded by unrelated variables.
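The contrast between convergent and discriminant evidence can be sketched by computing two correlations for the same participants: one with a measure of the same construct and one with a measure of an unrelated construct. All scores below are invented for illustration, and the compact helper `pearson_r` is an assumption of this sketch.

```python
from math import sqrt


def pearson_r(x, y):
    """Compact Pearson correlation for two equal-length score lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))
    return num / den


# Hypothetical scores for the same ten participants.
new_depression_test = [10, 25, 14, 30, 8, 19, 22, 12, 27, 16]
established_depression_scale = [12, 27, 13, 33, 9, 20, 21, 14, 29, 15]
intelligence_test = [105, 102, 112, 104, 97, 110, 95, 108, 103, 93]

# Same construct: a high correlation supports convergent validity.
convergent_r = pearson_r(new_depression_test, established_depression_scale)
# Unrelated construct: a correlation near zero supports discriminant validity.
discriminant_r = pearson_r(new_depression_test, intelligence_test)

print(f"convergent r = {convergent_r:.2f}")
print(f"discriminant r = {discriminant_r:.2f}")
```

In this made-up sample the first correlation is close to 1 and the second is close to 0, which is the pattern the two forms of validity describe. What counts as "high" or "low" in practice depends on the field and the measures involved.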

Other types of validity

In addition to criterion validity, several other types of validity are essential for evaluating the accuracy and reliability of measurement tools. These include construct validity, face validity, and content validity. Each type of validity addresses different aspects of how well a test or instrument measures the intended construct.

Construct validity

Construct validity refers to the extent to which a test or instrument accurately measures the theoretical construct it is intended to measure. It involves both convergent and discriminant validity, as it assesses whether the test correlates well with other measures of the same construct (convergent validity) and does not correlate with measures of different constructs that should not be related (discriminant validity). Establishing construct validity is important for ensuring that the test truly reflects the underlying theoretical concept.

For example, a new intelligence test should demonstrate construct validity by correlating well with other established intelligence tests (convergent validity) and not correlating with unrelated constructs like personality traits (discriminant validity). High construct validity ensures that the test is a meaningful measure of intelligence.

Face validity

Face validity is the extent to which a test appears to measure what it claims to measure, based on subjective judgment. Unlike other forms of validity, face validity does not involve statistical analysis but rather relies on the assessment of experts or stakeholders.

Although face validity does not rely on statistical validation, it is important for ensuring that the test is acceptable to those who use or take it.

For instance, a customer satisfaction survey should have items that clearly relate to customer experiences and perceptions. If the survey items are straightforward and relevant, the survey is said to have high face validity. While not a statistically based measure, face validity helps ensure that the test is perceived as relevant and appropriate by its users.

Content validity

Content validity assesses whether a test adequately covers the entire range of the construct it aims to measure.

This type of validity involves a thorough examination of the test items to ensure they represent all aspects of the construct. Content validity is particularly important in educational and psychological testing, where comprehensive coverage of the subject matter is essential.

For example, a math proficiency test should include items that cover all relevant areas of mathematics, such as algebra, geometry, and arithmetic. High content validity ensures that the test provides a complete and accurate assessment of the construct.

Establishing criterion validity

Establishing criterion validity means demonstrating that a new measurement tool or test accurately reflects or predicts an outcome based on an established criterion. This process calls for rigorous methods to ensure the new measure is both reliable and valid.

The following subsections outline the key steps in establishing criterion validity.

Selecting appropriate criteria

The first step in establishing criterion validity is to select appropriate criteria against which the new measure will be evaluated. The chosen criteria should be well-established, reliable, and relevant to the construct being measured.

For example, if a new test is designed to assess academic performance, the criterion measure could be students' grades or scores from a widely accepted standardized test. The selected criterion should accurately reflect the construct to ensure a meaningful comparison.

Criterion validity testing

Criterion validity testing involves comparing the new measure with the chosen criterion measure to evaluate their relationship. This process typically includes conducting correlational studies to determine the strength and direction of the relationship between the two measures.

A high correlation in the expected direction indicates that the new measure has strong criterion validity, suggesting it accurately reflects the criterion measure.

For example, to test the criterion validity of a new depression scale, researchers might administer both the new scale and an established depression inventory to the same group of participants.

By analyzing the correlation between the scores from both measures, researchers can assess the criterion validity of the new scale. Statistical techniques, such as Pearson's correlation coefficient, are commonly used to quantify the strength of the relationship.
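As a minimal sketch of this calculation, Pearson's correlation coefficient can be computed directly from two lists of scores. The participant scores below are hypothetical, and the function name `pearson_r` is chosen for this example only.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's correlation coefficient for two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products of deviations from each mean.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations for each measure.
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


# Hypothetical scores for the same eight participants.
new_scale = [12, 18, 7, 22, 15, 9, 27, 14]
established_inventory = [14, 20, 9, 25, 15, 11, 30, 13]

r = pearson_r(new_scale, established_inventory)
print(f"r = {r:.3f}")
```

The coefficient ranges from -1 to 1. A value near 1 here would mean that participants who score high on the new scale also tend to score high on the established inventory.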

Addressing potential challenges

Establishing criterion validity can present several challenges, such as finding suitable criterion measures and accounting for external factors that may influence the results. Researchers must carefully select criterion measures that are not only relevant but also free from biases and errors.

Additionally, external factors, such as participants' varying levels of motivation or environmental influences, can affect the accuracy of the criterion validity assessment.

To address these challenges, researchers should conduct thorough pilot testing and use multiple criterion measures when possible. Statistical techniques such as partial correlation or multiple regression can also help to control for external factors and provide a more robust evaluation of criterion validity.
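One common way to control for an external factor is a first-order partial correlation, which estimates the relationship between the new measure and the criterion while holding a third variable constant. The sketch below assumes the three pairwise correlations have already been computed. The numbers and variable names are hypothetical, with participant motivation standing in for the external factor.

```python
from math import sqrt


def partial_correlation(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y, holding z constant."""
    denom = sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))
    if denom == 0:
        raise ValueError("z is perfectly correlated with x or y")
    return (r_xy - r_xz * r_yz) / denom


# Hypothetical pairwise correlations.
r_new_criterion = 0.80         # new measure vs. criterion measure
r_new_motivation = 0.50        # new measure vs. motivation
r_criterion_motivation = 0.50  # criterion measure vs. motivation

adjusted = partial_correlation(
    r_new_criterion, r_new_motivation, r_criterion_motivation
)
print(f"partial r = {adjusted:.2f}")
```

If the adjusted value stays close to the original correlation, the external factor accounts for little of the relationship. A large drop would suggest that part of the apparent criterion validity reflects the shared influence of that factor.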