How to Analyze HTML Files for Research

Introduction

It's easy to take for granted how much information the Internet has inundated us with. Each and every page or file on each and every website is a node in a large network that researchers have only just begun to analyze for qualitative research inquiries in marketing, communication, sociology, and a whole host of other fields.

So what does researching HTML files and sites on the Internet entail? In this article, we'll look at research that explores not just the text found on the Internet but also the nature of information in digital literacies, laying the foundation for discussing how researchers can conduct data analysis on online resources.

Conducting research on web pages

There's long been something fundamentally different between traditional texts and HTML files. Imagine reading a book or an encyclopedia set. You can browse through either text, page by page, or flip to the most relevant place in the text to get the answer you're looking for. You could be looking through hundreds or even thousands of pages, oftentimes more than you might be able to fit in your backpack or a work table!

A website or a network of websites is built differently, changing the very nature of how people find information or conduct analysis. Links connect pages together, meaning readers can jump from one text to another easily and more quickly than if they were poring over stacks of books or other paper publications. The power of this medium has since become apparent with the advent of search engines and chatbots where the retrieval of information is nearly instantaneous and effortless.

Moreover, the Internet is more than just a collection of texts. While web pages were initially designed simply to store records or information, websites have become far more sophisticated, serving as message boards, social media platforms, file sharing services, and even online games.

The advent of digital literacies spurred on by the Internet has been a significant development for qualitative research, not just in the breadth and depth of information made more easily available, but also in the way people communicate and share information with each other. Rather than treat websites as simply digital versions of books or other texts, researchers should look at the Internet as a whole different medium of communication.

Of course, researchers can simply use HTML pages as a source of text for their qualitative research. In that case, traditional approaches such as thematic analysis, grounded theory, discourse analysis, and content analysis are useful methods for drawing insights from textual data, particularly if you are looking to understand the meaning of online texts.

However, there are countless other avenues to research when it comes to analyzing websites. Think about how a website links to another, or how one page connects to other pages. Unlike with traditional media, a link connects sources of information and platforms together, changing the behavior of users who would otherwise browse through texts to identify the answers they're looking for.

This creates a whole new language for understanding how people communicate knowledge. For example, a blog article or a social media post might "go viral" when a significant number of users link to the same source at once. The nature of this virality invites a number of interesting research questions in research fields like sociology, marketing, and communication.

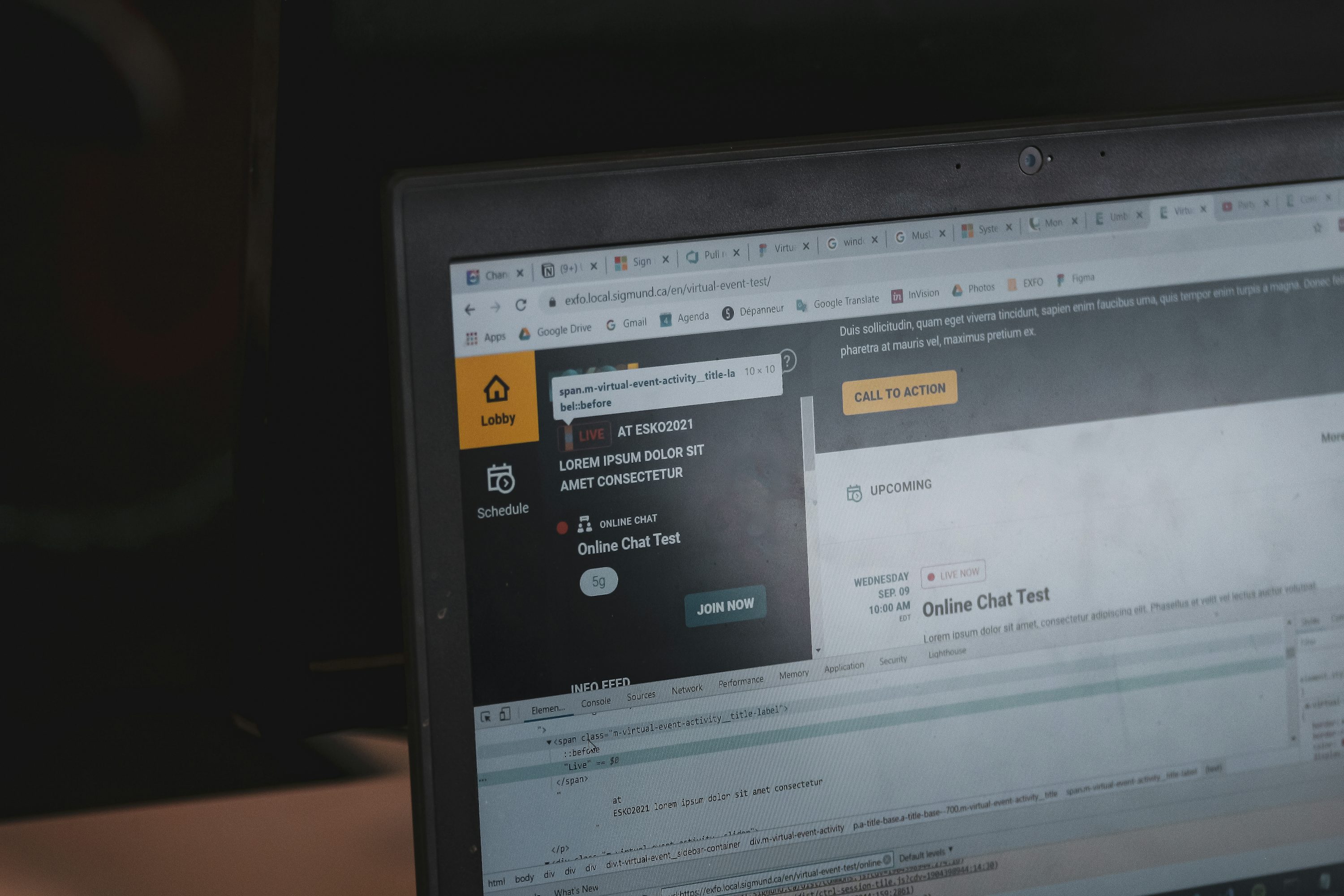

Website design also invites other questions for research. For example, how and why are websites tailored for a certain web browser over others? What makes for a "good" website design, especially with the increasing use of smartphones to search the Internet? Conducting analysis to address these inquiries requires collecting not only textual data, but data from multimedia in order to capture the full experience of browsing a website.

How to analyze a web page or HTML document

The most obvious considerations for researching websites involve issues of data collection and data organization. Conducting qualitative research efficiently often depends on making sure the salient parts of the data are captured in detail and then organized in a manner that allows for the categorization and division of data into discrete units of analysis.

Data collection from websites requires careful consideration of what you are looking to examine for your study. If you're simply looking for an analysis of text, then it's a matter of copying and pasting text from a web page, post, or other form of input. But if your research question has more to do with how information is transmitted or how people use them, then your research design requires further development to include non-textual data such as visual and audio elements.

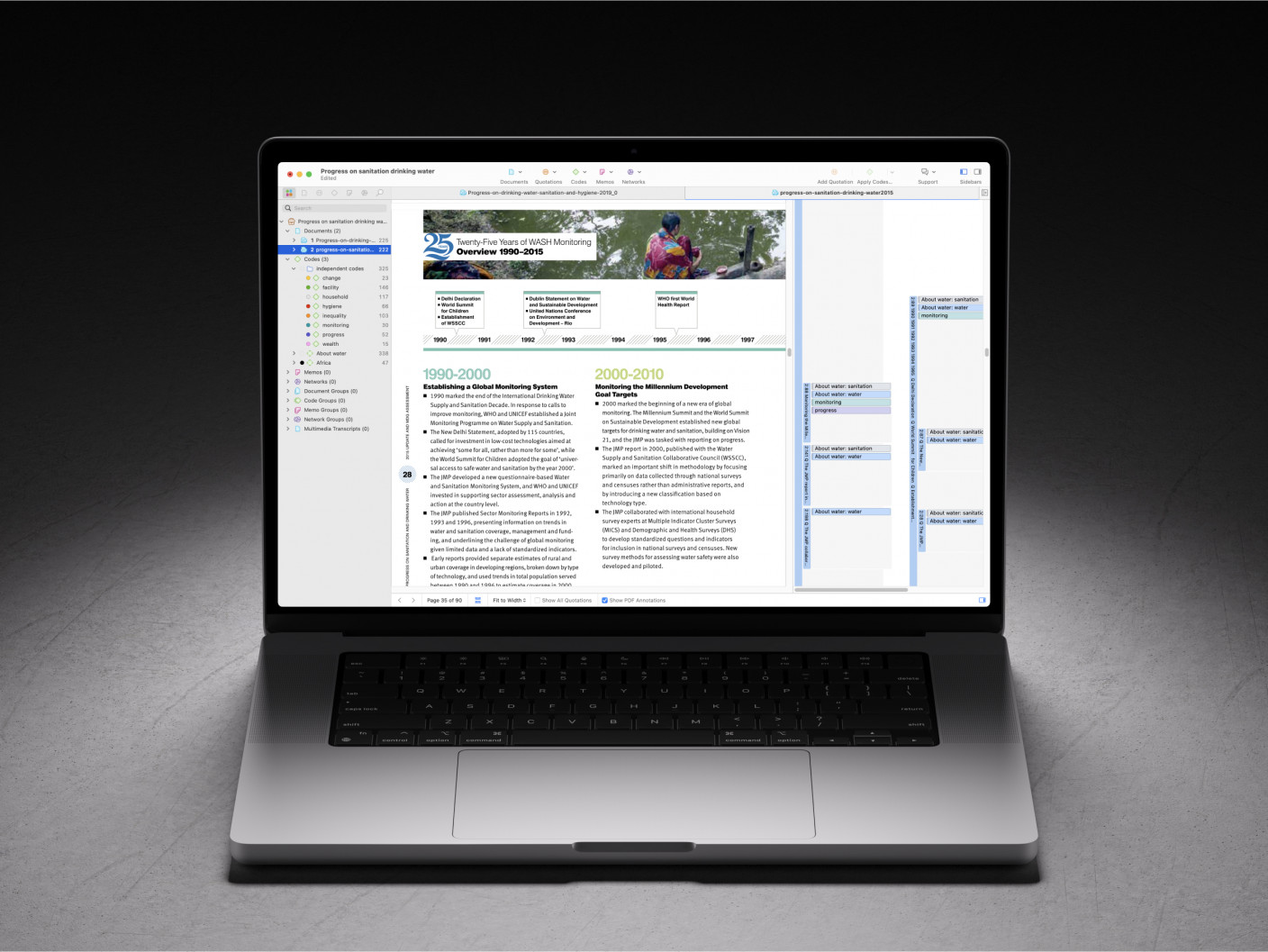

To that end, capturing data from HTML pages might require a screen capture tool or a PDF printing capability to document the design elements of websites. Most modern browsers give users the option to convert HTML pages to PDF files which qualitative data analysis software, such as ATLAS.ti, can then analyze. Other tools are also available to allow users to download videos or audio files from video sharing websites or social media platforms such as Twitter/X, Instagram, and TikTok.

Keep in mind, however, that if you want to conduct a document analysis on social media data, then it might benefit you to either treat each post as its own document or code the data in a way that allows you to identify the author or context of each post. In addition, you might consider analyzing the comments on a selected social media post, in which case you could download all the comments and import them into a qualitative data analysis software, such as ATLAS.ti. Whatever data you are looking to capture, it is important to be able to categorize the data by cohesive units of analysis to facilitate qualitative coding later on.

As with any qualitative data, researchers should also address the ethical considerations of taking data from any website. Especially when you're working with audio or video data, there are issues of copyright and intellectual property that protect creators' investment of time, money, and effort in posting to or developing a website. There are also issues of handling personal or confidential information so that they aren't used unfairly. To that end, make sure to anonymize any personal information and limit the dissemination of raw data from websites to mitigate any copyright considerations while preserving the essence of the data you are trying to capture. Doing so prior to data analysis can save a lot of time addressing any obstacles to research publication raised by complaints of the original authors.